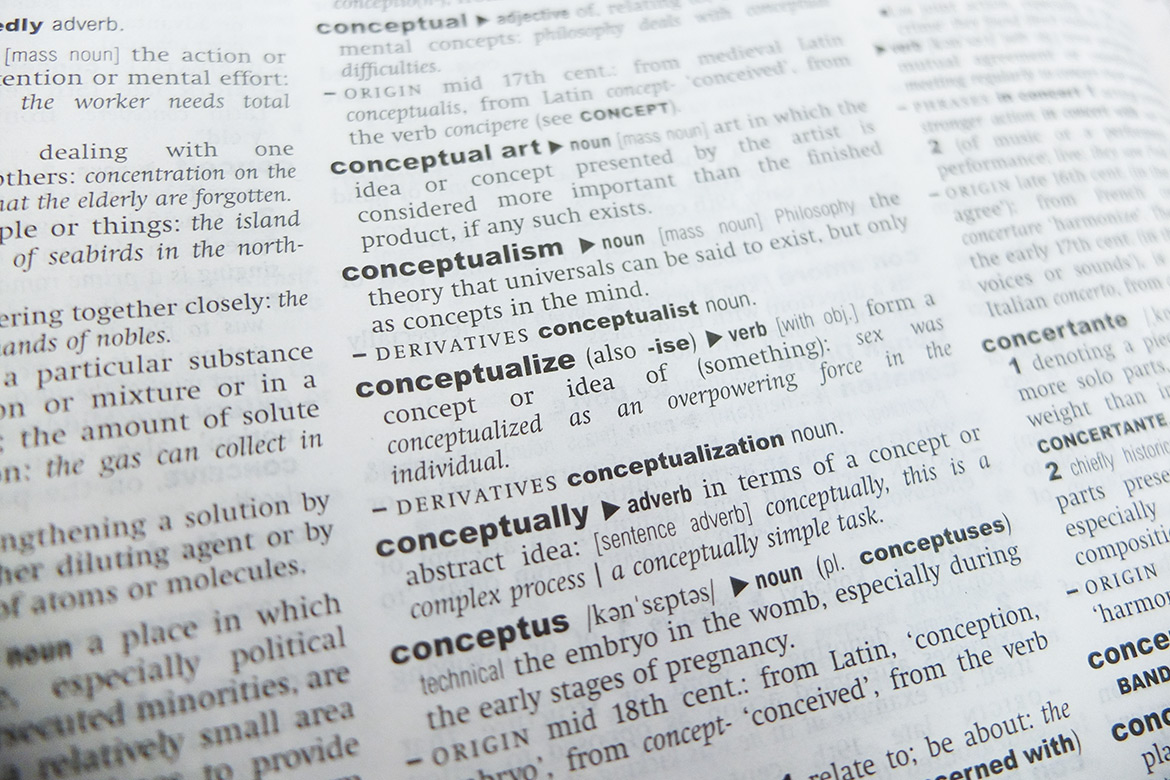

Concepts

Artificial intelligence

AI isn’t magic, but a matter of logic and probabilities. We consider what ‘artificial intelligence’ really means.

Photo: Florian Fisch

“We propose that a two month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College”. This remarkably unspectacular sentence from a research proposal marked the first time the term ‘artificial intelligence’ appeared in the scientific world. The Dartmouth Conference that followed has since become famous as the founding moment of the field itself. The study, the proposal continues, was to proceed on the basis of “the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it”.

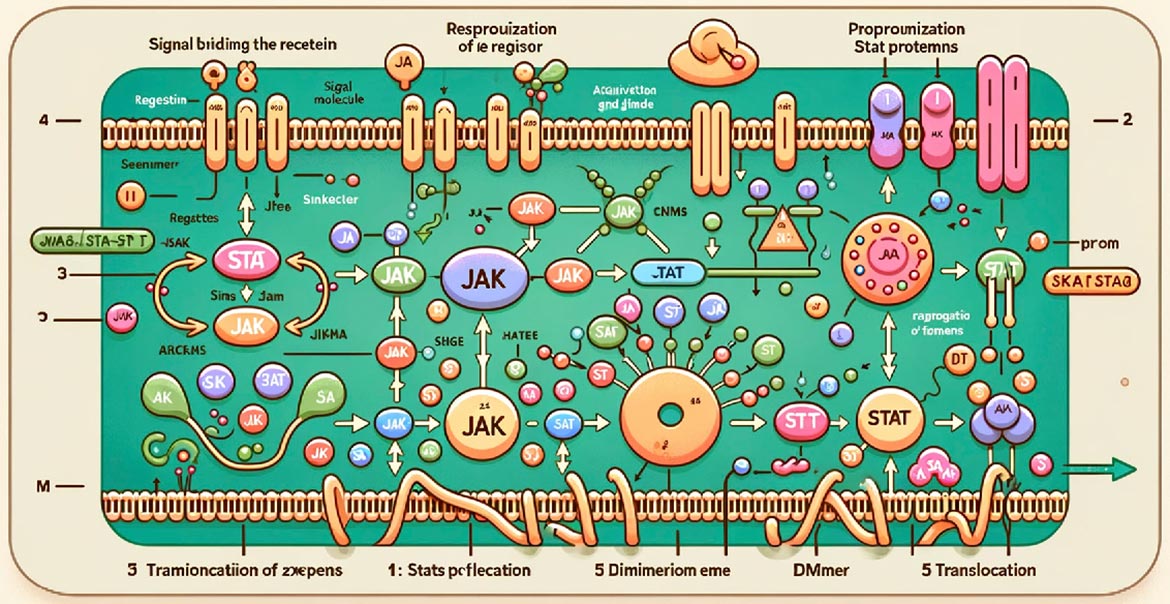

This symbolic form of AI, in which programs were meant to reproduce the rules of logical reasoning, is known today as ‘good old-fashioned artificial intelligence’. By now, the dominant approach is a different one, in which connections are made by calculating probabilities. It relies on so-called ‘neural networks’, whose internal weightings are recalibrated with each new example they are trained on. To call this ‘artificial intelligence’ is to use an overloaded, misleading term, says Christophe Kappes, himself a pioneer of digitalisation. And as Golo Roden, the CTO of ‘the native web’, points out, current success stories such as image generators and large language models like GPT-4 rest “not on magic, but on systematic processes”.
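To make the contrast between the two approaches concrete, here is a toy sketch in Python. It is our own illustration, not code from Kappes or Roden: a hand-written logical rule sits next to a single artificial ‘neuron’ that learns the same behaviour from examples, outputs a probability, and nudges its weights slightly after each example. The names `rule_based_and` and `Neuron`, the logical-AND task, the learning rate and the number of training rounds are all assumptions chosen for illustration. Real networks such as those behind GPT-4 contain vastly more weights and layers, but the underlying principle of adjusting numbers to reduce error is the same.

```python
import math

# Symbolic ("good old-fashioned") approach: a programmer writes the rule explicitly.
def rule_based_and(a: int, b: int) -> bool:
    return a == 1 and b == 1

def sigmoid(z: float) -> float:
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

class Neuron:
    """Hypothetical single artificial neuron (logistic regression), trained one example at a time."""

    def __init__(self, n_inputs: int, learning_rate: float = 0.5) -> None:
        self.weights = [0.0] * n_inputs  # start with no knowledge at all
        self.bias = 0.0
        self.learning_rate = learning_rate  # assumed value for illustration

    def probability(self, inputs: list[float]) -> float:
        """Weighted sum of the inputs, turned into a probability."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, inputs))
        return sigmoid(z)

    def update(self, inputs: list[float], target: float) -> None:
        """Recalibrate the weights after a single example (log-loss gradient step)."""
        error = self.probability(inputs) - target
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, inputs)]
        self.bias -= self.learning_rate * error

# Training data: the neuron only ever sees examples, never the rule itself.
examples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
neuron = Neuron(n_inputs=2)
for _ in range(2000):
    for inputs, target in examples:
        neuron.update(inputs, target)

for inputs, _ in examples:
    p = neuron.probability(inputs)
    print(inputs, f"rule: {rule_based_and(*inputs)}", f"neuron: p={p:.3f}")
```

The rule is exact but had to be written by hand; the neuron never states a rule, it only ends up assigning a high probability to the right answer after many small corrections.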