Infographics: ikonaut

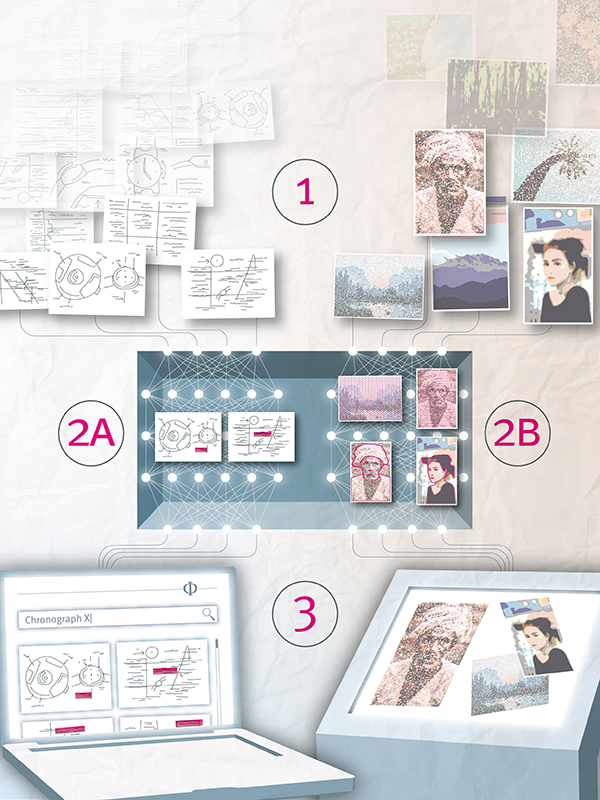

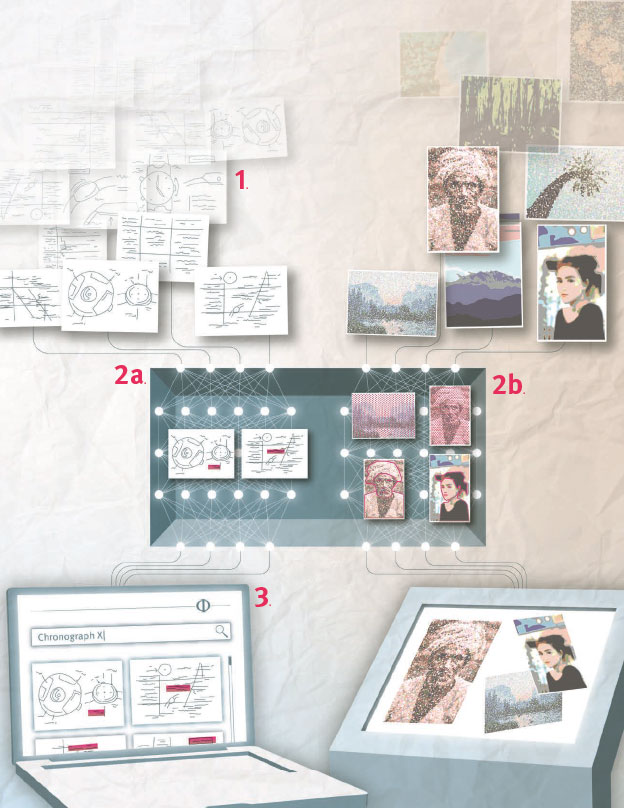

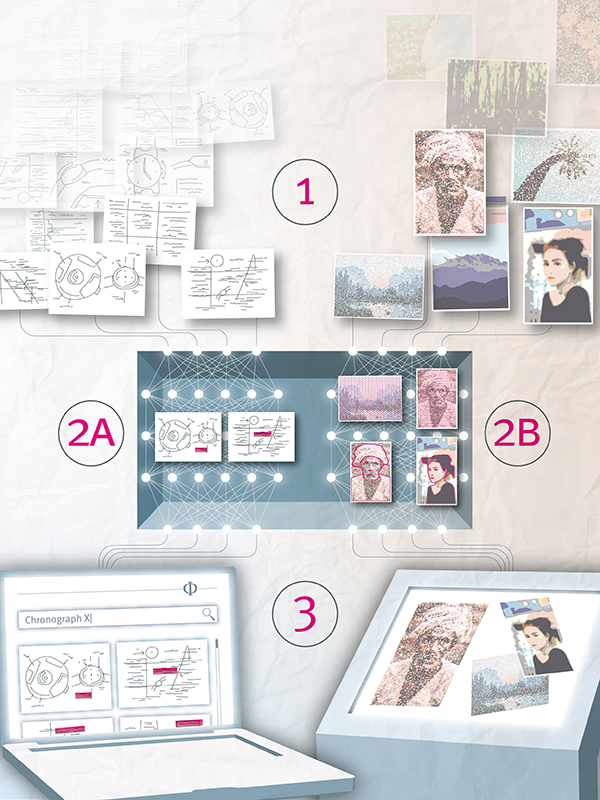

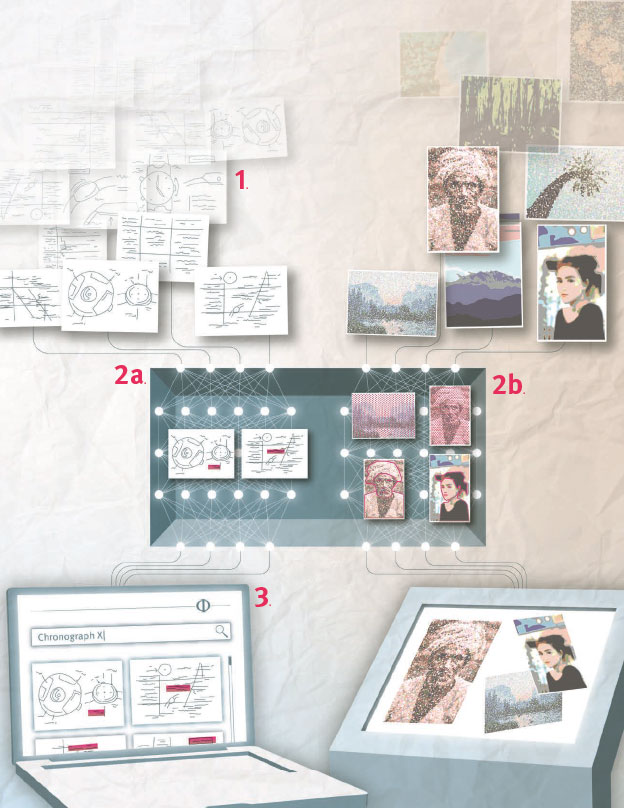

3. The information is at your fingertips

Employees of a watch manufacturer can quickly find the people, times, places or objects involved in producing a particular watch. They can do so on screen, without having to descend into the archives in the basement. And visitors to an art exhibition can have fun browsing through images similar to the favourites they have just seen.

2b. Find similarities in images

Neural networks trained on big data from the Internet can reliably recognise human faces or cats. Odoma takes such networks and fine-tunes them to fit its customer's much smaller dataset. The aim is to recognise visual patterns, including colours, lines, styles and themes. The algorithm's intentional fuzziness lets visitors search visually for similar works. The choices they make help to improve the system.
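As a rough illustration of how a visual search for similar works might operate, the sketch below ranks images by the cosine similarity of their feature vectors. It assumes that a fine-tuned network has already turned each image into a short list of numbers; the vectors, the image names and the `most_similar` helper are all invented for this example and do not describe Odoma's actual system.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as dissimilar
    return dot / (norm_a * norm_b)


def most_similar(query, collection, top_k=3):
    """Rank the images in `collection` by similarity to `query`, best first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in collection.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical feature vectors; a real network would produce hundreds of dimensions.
collection = {
    "portrait_a": [0.9, 0.1, 0.0],
    "portrait_b": [0.6, 0.4, 0.2],
    "landscape_c": [0.1, 0.9, 0.3],
}
favourite = [0.85, 0.15, 0.05]  # the image a visitor has just picked

results = most_similar(favourite, collection, top_k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

Because the ranking returns near matches rather than exact ones, the fuzziness described above comes for free: works that share colours or themes with the favourite score highly even if they are not the same image.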

2a. Extract the exact information

Odoma’s algorithm can decipher handwriting in different styles and written in different directions, even new text written over old or text that has been struck through. Artificial intelligence is also used to extract information on people, concepts, places and dates, because rule-based systems are quickly overwhelmed by the complexity of the task. Human annotators then double-check low-confidence results and train the neural network.
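The human double-check can be pictured as a simple routing step: results the network is confident about are accepted, and the rest go to an annotator whose corrections become new training examples. The following is a minimal sketch under stated assumptions. The threshold value, the record fields and both function names are hypothetical, chosen only to illustrate the idea.

```python
REVIEW_THRESHOLD = 0.8  # hypothetical cut-off; a real system would tune this value


def route_results(extractions, threshold=REVIEW_THRESHOLD):
    """Split extractions into accepted results and ones needing human review."""
    accepted, needs_review = [], []
    for item in extractions:
        if item["confidence"] >= threshold:
            accepted.append(item)
        else:
            needs_review.append(item)
    return accepted, needs_review


def build_training_examples(needs_review, corrections):
    """Pair each reviewed prediction with the label the annotator supplied."""
    examples = []
    for item in needs_review:
        if item["id"] in corrections:  # skip items nobody has reviewed yet
            examples.append({
                "id": item["id"],
                "predicted": item["text"],
                "label": corrections[item["id"]],
            })
    return examples


# Invented sample output of a handwriting-recognition step.
extractions = [
    {"id": 1, "text": "Geneva", "confidence": 0.97},
    {"id": 2, "text": "1 8 5 Z", "confidence": 0.41},
    {"id": 3, "text": "J. Dupont", "confidence": 0.63},
]

accepted, needs_review = route_results(extractions)
corrections = {2: "1852"}  # the annotator has fixed item 2 so far
training_examples = build_training_examples(needs_review, corrections)
print(len(accepted), len(needs_review), training_examples)
```

Keeping the predicted text next to the corrected label lets the same records serve both as an audit trail and as training data for the next round.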

1. Scan your material

An auction house asks a manufacturer of luxury watches whether its archive of handwritten spreadsheets can confirm that an old model is genuine. A museum wants to make its whole collection searchable for its visitors. Odoma, an EPFL spin-off, uses artificial neural networks to make thousands, even millions, of cultural heritage documents accessible and searchable.