Feature: Evaluating the evaluation

And the winner is . . .

Reading critically, drawing lots or letting the public decide? We look at six ways to decide whose research should be funded. Some of them are well-tried and classical, others unusual and alternative.

An almost fair system under stress

Could research exist without peer review? It’s hard to imagine today. And yet the process hasn’t actually been around that long. Until the early 20th century, when the research community as a whole was still small, researchers mostly published whatever they themselves considered worth disseminating. Whether or not you were awarded research funds depended more on patronage and nepotism than on any independent process. It was only after the Second World War, when state funding organisations such as the German Research Foundation (DFG) and the National Science Foundation in the USA were still in their infancy, that these organisations began to use what is known as peer review.

To be sure, there had already been numerous instances of projects getting funded on the basis of a recommendation from a committee of fellow researchers. But it was not until peer review came along that clear criteria and procedures for evaluating research were established. This meant projects could be selected on the basis of their quality and relevance, independent of any personal interests. “That worked very well for a while”, says Stephen Gallo of the American Institute of Biological Sciences, who studies peer-review procedures. He points out a further advantage of the system: if a funding application is rejected, its peer reviewers have to give their reasons, and these usually include recommendations for improving the research project and the application. “This enables younger researchers in particular to undergo a learning process that can benefit them the next time”.

But this system is under stress today. In recent decades, research infrastructure has grown across the world, and with it the number of researchers and projects seeking funding. And their numbers, says Gallo, have grown faster than the budgets of the funding institutions. As a result, an ever smaller proportion of projects gets funded. “For a long time, the reviewers of research proposals were able to choose between good proposals and inadequate ones. Today, they often have to decide between the exceptional and the excellent”.

Studies have shown that this in turn raises the risk of evaluations becoming more biased and more conservative. In this environment, evaluators tend to give more weight to a solid methodology than to an innovative approach, and they favour male researchers and those from prominent universities. Funding organisations are trying various approaches to counter this development. But Gallo is convinced that “peer review will also function better as soon as research funding isn’t so scarce any more”.

For everyone who has will be given more

Three million US dollars: that’s how much a single ‘Breakthrough Prize’ is worth. Other highly endowed prizes include the Dutch Spinoza Prize, worth EUR 2.5 million, and the Shaw Prize in Hong Kong, worth USD 1.2 million. There are also many more middle-range and small prizes available. Here, too, decisions are made by peer reviewers, the difference being that they are assessing not the prospects of future projects but scientific successes that have already been achieved. “Such prize money is meant to give innovative, productive researchers a degree of scientific freedom”, says Gallo.

There are also funding programmes to help people up the career ladder. To qualify for these, researchers have to meet certain criteria relating to, for example, the academic titles they have already acquired, their gender, their age, the number of years they have been in research and their type of employment at their university.

If funding isn’t tied to a specific project, researchers can take some risks with it. It also means they can change course quickly if an idea doesn’t work out. “This is actually closer to the way research works in reality than is the case with short-term, comparatively rigid project funding”, says Gallo. Interestingly, the prizes mentioned above achieve more than just giving money to individual researchers: fields in which prizes have been awarded attract over 35 percent more new researchers than other fields, and they produce 40 percent more publications.

The downside, says Gallo, is that this kind of funding is extremely one-sided. The researchers selected for the highly endowed prizes and the popular career programmes are often those who are very productive and who have already been successful with funding applications in the past. This kind of research funding therefore has a built-in inequality of opportunity: those who have succeeded once will do so again, mostly because of their past successes rather than because of what they might be achieving today.

The roll of the dice

Fair competition gives everyone an equal chance, doesn’t it? Rather like drawing lots, perhaps? In fact, some funding organisations, including the Swiss National Science Foundation (SNSF), have indeed started drawing lots when evaluating proposals. They do so at the point where peer review reaches its limits. In theory, this can benefit highly creative research proposals, because when more and more applicants compete for limited funds, peer reviewers tend to favour the more conservative proposals. To counter this, certain funding organisations have taken to using a lottery. One example is the ‘1000 Ideas Programme’ of the Austrian Science Fund. First, twelve projects are selected by peer review; then twelve more are drawn by lot from the remaining applications that have been rated as high quality.
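The two-step procedure described above (fund the best on merit, then draw lots among the remaining high-quality proposals) can be sketched in a few lines of Python. This is a minimal illustration, not the Austrian Science Fund’s actual procedure: it assumes each proposal has already been given a single numerical peer-review score, and the names `Proposal` and `select_with_lottery`, the scoring scale and the quality threshold of 4.0 are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    """Hypothetical proposal record: an ID plus an aggregated peer-review score."""
    proposal_id: str
    score: float  # assumption: higher is better, e.g. a mean reviewer rating


def select_with_lottery(proposals, n_by_review=12, n_by_lot=12,
                        quality_threshold=4.0, seed=None):
    """Fund the top-ranked proposals on merit, then draw further ones by lot.

    The threshold and scale are illustrative assumptions, not any funder's rules.
    """
    ranked = sorted(proposals, key=lambda p: p.score, reverse=True)
    funded_by_review = ranked[:n_by_review]
    # Only the remaining proposals rated as high quality enter the draw.
    pool = [p for p in ranked[n_by_review:] if p.score >= quality_threshold]
    # A fixed seed makes the draw reproducible, e.g. for later auditing.
    rng = random.Random(seed)
    funded_by_lot = rng.sample(pool, min(n_by_lot, len(pool)))
    return funded_by_review, funded_by_lot


if __name__ == "__main__":
    scores = [4.9, 4.8, 4.7, 4.6, 3.1, 4.5, 4.4, 2.0, 4.2, 4.1]
    demo = [Proposal(f"P{i:02d}", s) for i, s in enumerate(scores)]
    top, drawn = select_with_lottery(demo, n_by_review=3, n_by_lot=2, seed=1)
    print([p.proposal_id for p in top])  # ['P00', 'P01', 'P02']
    print([p.proposal_id for p in drawn])
```

Note that the lottery only ever chooses among proposals that reviewers have already judged fundable; chance decides between them, not between good and weak applications.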

These lottery systems are also intended to minimise systematic bias. “Such bias tends to creep in when applications have been assessed to be of very similar quality”, says James Wilsdon. He has studied lottery systems and is the director of the Research on Research Institute at University College London. Marco Bieri, a research associate at the SNSF, agrees that reviewers’ verdicts on different projects are sometimes separated by no more than a hair’s breadth. “Some applications cannot be distinguished qualitatively from each other merely by means of the evaluation criteria provided”, he says.

Bieri ran a pilot test in which the SNSF tried using a lottery for qualitatively similar cases in its Postdoc.Mobility funding instrument. In 2021, the SNSF became the first research funding organisation in the world to make this method available in all its funding instruments. However, that does not mean lots are drawn across the board. “It’s simply one option available when a group of applications are borderline worthy of being funded but cannot be distinguished qualitatively from each other”, says Bieri.

Thus far, 4.5 percent of SNSF project applications have been decided by drawing lots. But does such a partial use of random procedures actually make the overall evaluation system fairer? Wilsdon answers in the affirmative, albeit cautiously: “Yes, initial studies offer at least certain indications that this is the case”.

The idea alone counts

Did you know that 30 percent of the most important work undertaken by Nobel Laureates in medicine and chemistry was not financed by any funding organisation? The reason is that this work mostly involved ideas that challenged prevailing views, could not be backed up with scientific data at the time, and relied on unvalidated methods. “This is high-risk research from the perspective of the funding organisations because it could lead nowhere at all and produce zero results”, says Vanja Michel, a research associate at the SNSF.

In recent years, however, various funding organisations have acknowledged the shortcomings of this reluctance and have begun looking for solutions. For example, the National Institutes of Health (NIH), a major funding organisation in the USA, introduced its ‘Director’s Pioneer Award’ in 2004. Worth a generous USD 700,000 a year for five years, it is open to researchers at any career stage and is designed specifically to let them work on high-risk ideas. Applicants don’t have to submit data from any preliminary studies, but they do have to show that they are exceptionally creative and innovative. The ERC Starting Grant of the European Research Council is a similar instrument.

The SNSF began supporting unconventional research projects in 2019, when it set up its Spark Programme. Applicants need only a doctorate or three years of research experience. Their proposals are evaluated in a double-blind procedure: not only do the evaluators remain anonymous, but so do the applicants. Their gender, age and research institution remain unknown to the reviewers. “The only thing that counts is the research idea”, says Michel, who is responsible for the programme. After its first year, it was clear that the majority of applicants were under 40, that 70 percent of them did not yet hold a professorship, and that 80 percent were applying for an SNSF grant for the very first time. “We’ve also seen that younger researchers are able to compete well against more experienced researchers”, says Michel. The funding offered by the programme is hardly huge, at up to CHF 100,000 for a maximum of twelve months, but it can provide a crucial initial boost for an idea that might otherwise have gone unfunded.

Pleasing everyone

T-shirts made of recycled plastic from the sea, observing moose in Alaska, or even therapies for rare bone cancer in children: these are just a few of the scientific projects raising money on the Swiss crowdfunding platform Wemakeit, most of them successfully. There’s no doubt about it: in terms of success rate, crowdfunding comes out on top. No less than 65 percent of the scientific crowdfunding projects advertised on Wemakeit reach their funding goal. That is significantly higher than the success rate of any traditional funding instrument: at British and American funding organisations, it usually lies between 20 and 30 percent.

It’s also true, however, that crowdfunding projects are often comparatively small. The sums targeted are on average just over CHF 12,000. “A striking number of projects deal with environmental and social sustainability and aim to provide the broader population with better access to science”, says Graziella Luggen, the deputy manager of Wemakeit.

Crowdfunding does have a kind of bias, because a topic that’s already well known and popular stands a better chance of success. Unlike Wemakeit, the bigger crowdfunding platforms such as Kickstarter or Indiegogo mostly feature technological, product-oriented projects. For more traditional research projects, Experiment.com offers another option to those keen to ask the worldwide public for a financial contribution, and this can work out well too. So far, the platform has featured almost 1,200 research projects, which have raised a total of over USD 11 million. All these crowdfunding options have one thing in common: besides providing funds, they can also be used to build a community.

To sum up: when compared to research funding overall, crowdfunding is a small fish indeed – but it does offer an alternative when you need funds for, say, a sub-project of a larger project, or when you need the starting capital to get a prototype made.

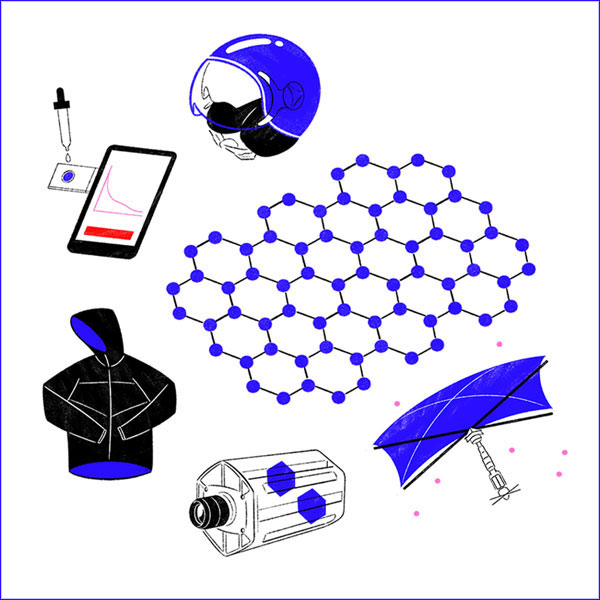

The digital helper

One thing is clear: traditional peer review has to cope with more and more funding applications. So what could be more natural than to enlist artificial intelligence to help out? In fact, you can find many online guides advising reviewers how to use the chatbot ChatGPT to evaluate proposals more efficiently.

This has sparked a broad debate, especially in the USA. “It’s important to maintain confidentiality when evaluating project proposals”, says Stephen Gallo. Reviewers who feed text from project proposals into ChatGPT to get help with their evaluations could be breaching their duty of confidentiality. “As far as I’m aware, there have not yet been any investigations into whether or not this is the case, nor into how a chatbot’s assistance might affect the evaluation itself. It would be exciting to find out”. Meanwhile, the National Institutes of Health in the USA have banned their reviewers from using ChatGPT and similar tools. Other funding organisations have also issued guidelines on this.

The situation is different when funding organisations use AI internally without passing on any data. Several of them are now experimenting with AI at various stages of the peer review process. Some use AI to weed out the weakest proposals, while others use it to find suitable experts for peer review by searching proposals and publications for keywords. The SNSF’s Spark Programme uses AI in this way too.
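How keyword searches might help match reviewers to proposals can be illustrated with a deliberately simple Python sketch. It is a hypothetical example, not the method used by the SNSF or any other funder: it assumes each potential reviewer is represented by some text from their publications, reduces texts to sets of keywords, and ranks reviewers by keyword overlap (the Jaccard index). The functions `keywords`, `jaccard` and `suggest_reviewers` and the tiny stopword list are illustrative assumptions; real systems are likely to use more sophisticated text analysis.

```python
import re

# Illustrative assumption: a tiny stopword list; real systems would use far more.
STOPWORDS = {"a", "an", "and", "the", "of", "in", "for", "on", "to", "with", "by"}


def keywords(text):
    """Lower-case word tokens, minus the stopwords above."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    """Shared keywords divided by all keywords across both sets (0 to 1)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def suggest_reviewers(proposal_text, reviewer_profiles, top_k=3):
    """Rank hypothetical reviewers by keyword overlap with a proposal.

    reviewer_profiles maps a reviewer's name to text from their publications.
    The result is only a shortlist that people still need to check.
    """
    target = keywords(proposal_text)
    scored = [(name, jaccard(target, keywords(text)))
              for name, text in reviewer_profiles.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    profiles = {
        "Reviewer A": "protein folding in membrane channels",
        "Reviewer B": "glacier melt and alpine hydrology",
        "Reviewer C": "membrane protein structure by cryo electron microscopy",
    }
    ranking = suggest_reviewers("membrane protein structure in bacteria",
                                profiles, top_k=2)
    print([name for name, _ in ranking])  # ['Reviewer C', 'Reviewer A']
```

A matcher this crude treats ‘protein’ and ‘proteins’ as unrelated words and knows nothing about conflicts of interest, which is one reason why human checking of such suggestions remains essential.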

As programme head Vanja Michel explains, however, the Spark team checks the AI’s suggestions in every case and often has to overrule them. Other organisations are still preparing to use AI. The German Research Foundation (DFG), for instance, is setting up a pilot project to assess whether it could use a digital assistant, for example to check application documents or applicants’ eligibility. The National Science Foundation in the USA isn’t using AI yet, but aims to have a strategy for it in place by the end of the year. Nobody in the funding business, however, yet sees any serious possibility that AI tools could evaluate applications on their own.

Illustrations: Arbnore Toska