Large language models

A new AI can generate correct hypotheses

Google recently presented a new AI system whose research hypothesis matched experimental findings that had not yet been published.

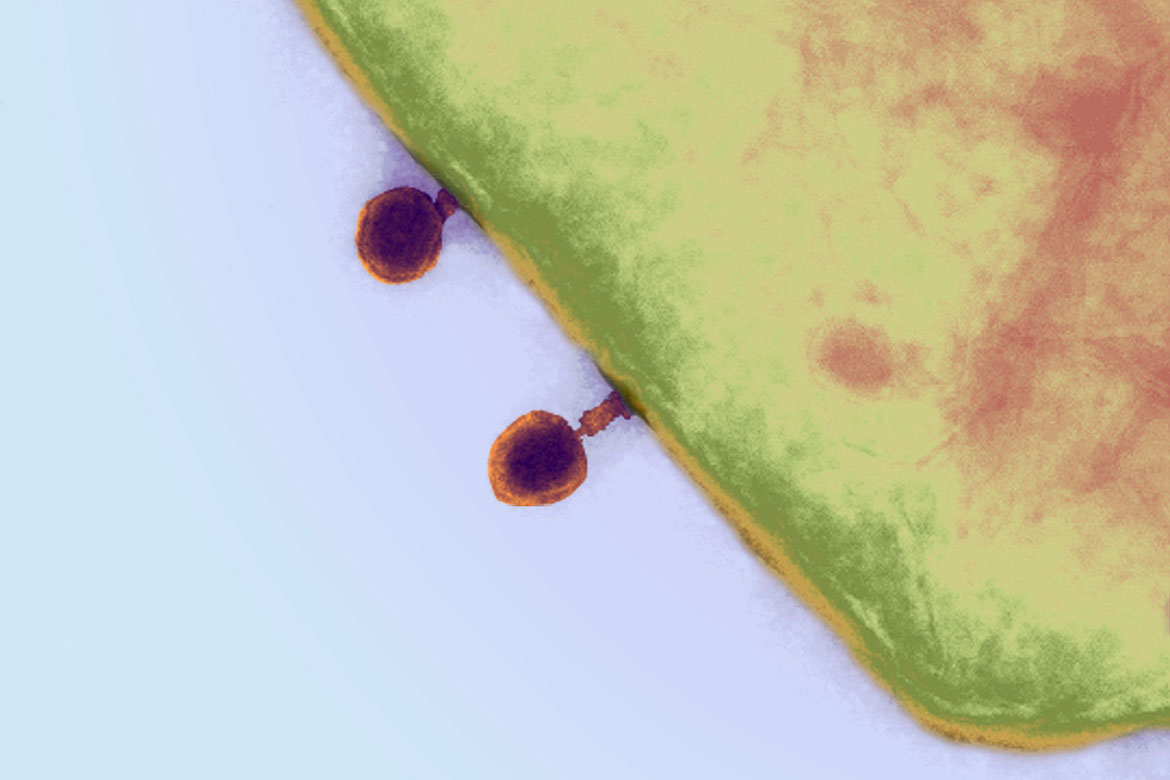

Viruses, coloured dark red in this image from an electron microscope, inject their DNA into the much larger yellow bacterium using their tail. | Photo: Science Photo Library / Keystone

As early as 2008, the laboratory robots Adam and Eve were testing new active substances in yeast cells, evaluating the results and deciding on their own which substance to try next. Now Google wants to go a step further and generate hypotheses for all kinds of research questions. To this end, the company announced the launch of its new AI system, ‘Co-Scientist’, in spring 2025.

To test its AI, Google took up an old research question about viruses that infect bacteria. Normally, these viruses use their tail to inject their DNA into the bacterium. But some viruses manage to do this without a tail, and until recently it was unknown how. Tiago Dias da Costa, a researcher at Imperial College London, solved this mystery shortly before Co-Scientist was given the same question. He has since told the BBC how impressed he is by the AI’s results: “We were very surprised because the main hypothesis was a mirror of the experimental results that we have obtained and took us several years to reach”. The results of his study, which showed how these viruses use the tails of other viruses, had been kept confidential until publication because a patent was pending. Co-Scientist therefore could not have known about them.

The Co-Scientist system comprises several language models that interact with one another, each specialised in a different task, such as generating ideas, assessing them or drawing up a ranking. The models were given only da Costa’s research question, the introduction to his study and its list of references.

Maria Liakata, a professor of natural language processing at Queen Mary University of London, isn’t surprised that an AI can combine existing information to create new knowledge. However, she also urges caution. The Google study “is more of a showcase paper than actually revealing a lot of the technical details”, she says. “But the fact that [this study] is so resource-intensive means that it’s not something readily replicable by scientists, [and] they would have to be working with Google in order to do it”.