REPRODUCIBLE SCIENCE

Going beyond statistical significance

Medical and social scientists try hard to reduce harmful bias in published results. They could look to physics for inspiration.

Having a clear idea of what you expect matters more than analysing numbers, some sociologists of science claim. | Illustration: zVg

Publishing experimental results only when they reveal something new and exciting about nature seems anathema to the idea of science as a disinterested pursuit of truth. But from clinical medicine to economics and sociology to psychology, scientists often do just that – writing up their results and submitting them to a journal only when they think they’ve identified a ‘positive’ effect. This leaves some or all of their null results in their metaphorical file drawer.

The upshot of this bias in publications is a skewed view of scientific knowledge as a whole, with the artificial prevalence of positive findings distorting the multi-study analyses that many fields rely upon. This bias also leads to wasted effort when ‘negative’ studies go unreported.

What’s more, many of the data that do get published are questionable, because a range of statistical techniques are used to extract a signal from a sea of noise when in all likelihood no such signal exists. This generation of false positives misleads scientists and the wider public, and is a particular problem in medicine, with many purported treatments turning out to be duds.

Some scientists have tried to tackle these problems by pushing for more transparency when publishing data and methods, particularly through the process of pre-registration. This involves publishers recording study designs and statistical techniques before any data have been collected, and then publishing results regardless of whether or not they yield exciting novelties.

However, some experts reckon pre-registration can thwart the freedom needed to make new discoveries, while others propose more fundamental changes. Harry Collins, a sociologist of science at Cardiff University, UK, argues that medical and social scientists could learn from the way physicists use statistics and also non-statistical physical intuition. “The solution to this bias lies not with publishers but with the way science is done by scientists”, he says.

Inform everyone about your plans

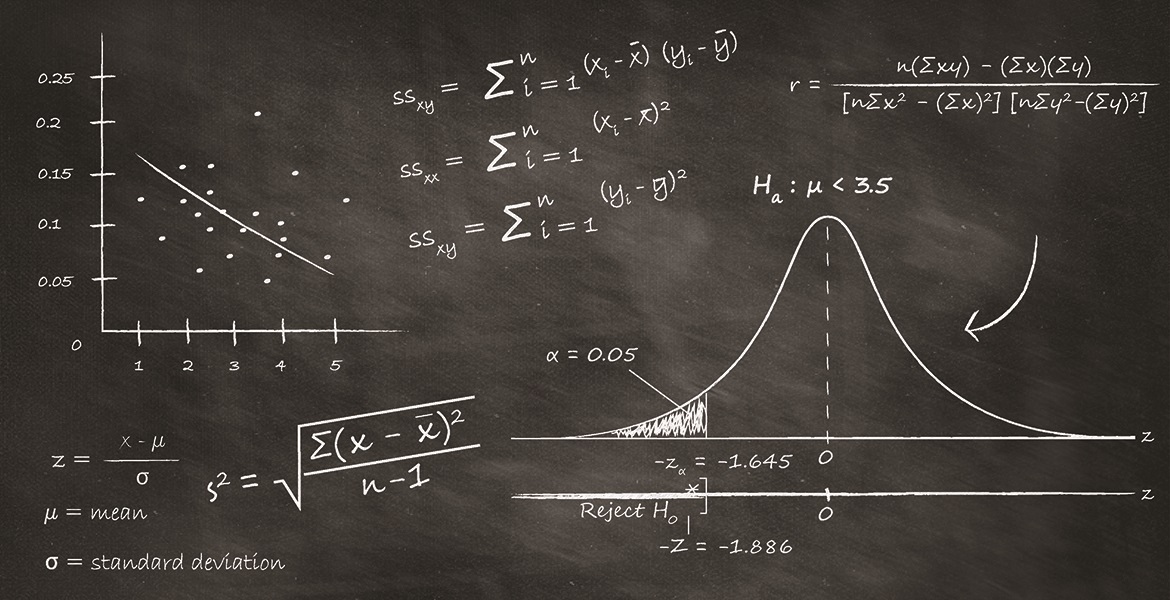

The problem of different biases in scientific research has been discussed for many years. Back in 2005, John Ioannidis, a medical scientist and epidemiologist at Stanford University, US, argued that most published findings in medicine were likely false, after he analysed researchers’ use of p-values – the chances, as he puts it, of any given signal arising when actually no true signal exists. He reckoned that by trawling large data sets and setting the significance threshold for p-values at five percent, researchers were almost certainly generating huge numbers of bogus effects.
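The arithmetic behind that worry can be sketched in a few lines of Python. This is only an illustration, not Ioannidis's own analysis: it assumes that every test is independent and that no true effect exists in any of them, and the function names are made up for the example.

```python
def chance_of_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `num_tests` independent tests
    crosses the threshold `alpha` even though no true effect exists."""
    return 1.0 - (1.0 - alpha) ** num_tests


def expected_false_positives(num_tests: int, alpha: float = 0.05) -> float:
    """Average number of bogus 'signals' among `num_tests` null tests."""
    return num_tests * alpha


if __name__ == "__main__":
    for m in (1, 5, 20, 100):
        print(m, chance_of_false_positive(m), expected_false_positives(m))
```

Under these assumptions, a researcher who runs 20 comparisons on pure noise has roughly a 64 percent chance of finding at least one 'significant' result.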

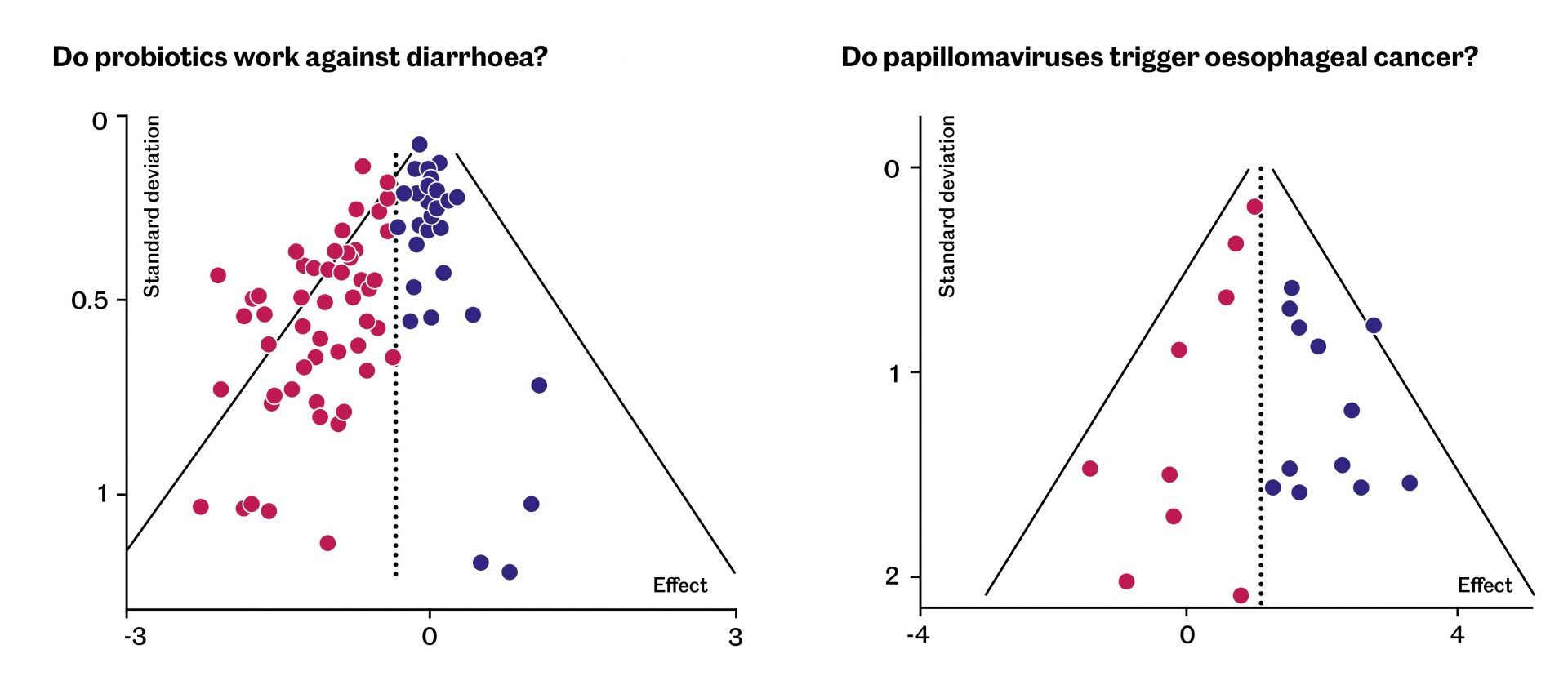

A visual assessment of the state of studies. In these funnel plots, popularised by the Bernese epidemiologist Matthias Egger, the small-scale studies are at the bottom and are scattered broadly around the average (the dotted line). The large-scale, precise studies are at the top. Right: the small-scale studies already point to the cause of the illness, and they are spread evenly on both sides of the average. Left: of the small-scale studies, presumably only those that could show the therapy was effective were published. The larger the studies, the more clearly the effect proved to be illusory. Illustrations: Bodara, after M. L. Ritchie et al. 2012 (left) and S. S. Liyanage et al. 2013 (right).
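The lopsided pattern in the left-hand chart can be reproduced with a small simulation. The sketch below is a toy model, not the data behind the illustrations: it assumes a therapy with no true effect, normally distributed estimates, and a file drawer that swallows every small study whose result is not 'significant' at the one-sided five percent level. All names and numbers are invented for the example.

```python
import random
import statistics


def simulate_studies(num_studies, sample_size, true_effect=0.0, sd=1.0, seed=0):
    """Return (estimate, standard_error) pairs for simulated studies."""
    rng = random.Random(seed)
    se = sd / sample_size ** 0.5  # bigger studies give more precise estimates
    return [(rng.gauss(true_effect, se), se) for _ in range(num_studies)]


def published_only(studies, z_threshold=1.645):
    """Keep only studies with a 'positive' result (one-sided 5% level)."""
    return [(est, se) for est, se in studies if est / se > z_threshold]


def mean_effect(studies):
    """Plain average of the effect estimates."""
    return statistics.fmean([est for est, _ in studies])


# The true effect is zero in every simulated study.
small = simulate_studies(2000, sample_size=20, seed=1)
large = simulate_studies(200, sample_size=2000, seed=2)

if __name__ == "__main__":
    print("all small studies:      ", mean_effect(small))
    print("published small studies:", mean_effect(published_only(small)))
    print("large studies:          ", mean_effect(large))
```

In this toy model the complete set of small studies averages out close to zero, as do the large ones, while the small studies that escape the file drawer all show a clearly positive effect that does not exist.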

Among the areas most criticised for bias have been medical trials. There is some evidence that bias has been diminishing in recent years. One study in 2015 by the US Agency for Healthcare Research and Quality found that trials of cardiovascular treatments yielded more positive results before the year 2000 than they did afterwards. But there have also been some very high-profile failures, such as the pharmaceutical company Roche withholding information about its Tamiflu antiviral drug.

Psychologists have also been struggling to make their research more reliable, after having discovered that many headline results from the 1950s onwards simply cannot be replicated. In fact, this field was the first to introduce pre-registration to journal publishing, and the practice has since spread to other fields, including medicine.

Ioannidis reckons that pre-registration has helped to make the file-drawer problem less common in science overall. But he thinks the problem of cherry-picking interesting data has got worse, with pre-registration unable to remove all the ‘degrees of freedom’ open to resourceful, would-be statisticians. “We now have more data, more complex tools, more faculty, and scientists with little training”, he says. “In these circumstances it is much easier to generate signals, many of which will not hold up”.

Treat animals like patients

One area where publication bias creates a particular problem is animal testing. The upcoming referendum on the proposal that such testing should be largely banned in Switzerland has led to dismay among academics. Swissuniversities, the umbrella organisation of Swiss higher education institutions, has argued that animal experiments are necessary for developing new drugs, pointing out that strict regulations require researchers to respect the dignity and wellbeing of animals and to obtain approval from cantonal veterinary offices.

However, according to Hanno Würbel at the University of Bern, many proposed animal experiments lack measures to counteract bias, with perhaps as many as 30 percent of studies remaining unpublished. This, he argues, is ethically unacceptable. “Every animal experiment is authorised on the understanding that it will produce important new knowledge”, he says.

Like others, Würbel reckons pre-registration is key to reducing this bias. But he acknowledges that some scientists worry this will limit their freedom to legitimately adjust methods or hypotheses in fast-moving areas of research. One potential way around this, he suggests, would be to distinguish between exploratory and confirmatory research.

In contrast, Daniele Fanelli, a methodologist and expert on research integrity at the London School of Economics, UK, argues that a bit of publication bias can actually be a good thing, as it helps overcome what is known as the ‘cluttered office problem’: the inability to identify interesting positive findings if they are swamped by null results. But he says that the trade-off between this and the problem of a bulging file drawer “varies between fields”.

Know your gravitational wave

In fact, Fanelli supports the old idea of a ‘hierarchy of the sciences’, within which, he maintains, disciplines can be distinguished according to the extent to which data ‘speak for themselves’ and theories can be rigorously tested. He made this argument in a 2010 analysis of nearly 2,500 papers from across science that reported having tested a hypothesis. He found that social scientists – at the bottom of the hierarchy – were on average much more prone to report positive findings than physical scientists.

Collins too believes it is crucial to distinguish between fields. He points out that physicists have developed very rigorous standards when it comes to interpreting statistics – placing the threshold for discovery at ‘five-sigma’, meaning just a one in 3.5 million chance that a signal is a fluke. But he notes that in the case of the 2015 discovery of gravitational waves, it wasn’t actually the statistics that persuaded researchers they had hit the jackpot. What really mattered, he maintains, was a conviction that the wave-shaped signals recorded by their detectors matched what they expected from a pair of merging black holes.
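The ‘one in 3.5 million’ figure can be checked directly. The sketch below assumes the usual convention of a one-sided tail of the normal distribution, which is the reading that matches the number quoted above; the function name is made up for the example.

```python
import math


def one_sided_tail_probability(sigma: float) -> float:
    """Chance that pure noise fluctuates at least `sigma` standard
    deviations above its mean, assuming a normal distribution."""
    return 0.5 * math.erfc(sigma / math.sqrt(2.0))


if __name__ == "__main__":
    p_five_sigma = one_sided_tail_probability(5.0)
    print("five-sigma p-value:", p_five_sigma)
    print("one in", round(1.0 / p_five_sigma))
    # For comparison, the five percent threshold common in medicine and
    # the social sciences corresponds to only about 1.645 sigma.
    print("1.645-sigma p-value:", one_sided_tail_probability(1.645))
```

The comparison shows how far apart the two conventions are: the five percent threshold lets through a fluke about once in 20 attempts, whereas five-sigma does so about once in 3.5 million.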

If social and biological scientists really want to overcome their biases and publish results that can be replicated, Collins argues, they need to look beyond their p-values and try to gain an intuitive sense of what is going on in their experiments. “People often don’t understand what statistics are for”, he says. “They are often an excuse not to do any real science”.

Ioannidis agrees that researchers must abandon their fixation with p-values, but offers a more direct solution. He argues that administrators need to impose certain rules from above, such as in some cases stipulating pre-registration, while individual researchers should be educated on the need for rigour. “Well-trained scientists realise this is integral to good science”, he says, “but they have to be trained”.